Create and access NFS server from Kubernetes on WEkEO

To give multiple pods on a Kubernetes cluster simultaneous read-write access to the same storage, you can use an NFS server.

In this guide we will create an NFS server on a virtual machine, create a file share on this server and demonstrate how to access it from a Kubernetes pod.

What We Are Going To Cover

Set up an NFS server on a VM

Set up a share folder on the NFS server

Make the share available

Deploy a test pod on the cluster

Prerequisites

No. 1 Hosting

You need a WEkEO hosting account with access to the Horizon interface: https://horizon.cloudferro.com.

The resources that you create and use will be reflected in your account wallet. Check your account statistics at https://cf-admin.wekeo2.eu/login.

No. 2 Familiarity with Linux and cloud management

We assume you know the basics of Linux and WEkEO cloud management:

Creating, accessing and using virtual machines: How to create new Linux VM in OpenStack Dashboard Horizon on WEkEO

Creating security groups: How to use Security Groups in Horizon on WEkEO

Attaching floating IPs: How to Add or Remove Floating IP’s to your VM on WEkEO

No. 3 A running Kubernetes cluster

You will also need a Kubernetes cluster to try out the commands. To create one from scratch, see How to Create a Kubernetes Cluster Using WEkEO OpenStack Magnum.

No. 4 kubectl access to the Kubernetes cloud

As usual when working with Kubernetes clusters, you will need the kubectl command. See How To Access Kubernetes Cluster Post Deployment Using Kubectl On WEkEO OpenStack Magnum.

1. Set up NFS server on a VM

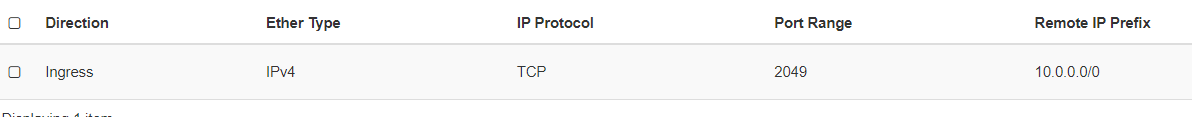

As a prerequisite for running an NFS server on a VM, go to the Network tab in Horizon and create a security group that allows ingress traffic on TCP port 2049, the standard NFS port.
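If you prefer the OpenStack command line to Horizon, a security group like this can be created with the OpenStack client, as in this minimal sketch. It assumes `python-openstackclient` is installed and your OpenStack RC file is sourced; the group name `nfs-server` and the subnet `10.0.0.0/24` are hypothetical defaults, so substitute your cluster network's CIDR.

```bash
#!/usr/bin/env bash
# Minimal sketch (assumption): create the NFS security group with the
# OpenStack CLI instead of Horizon. Requires a sourced OpenStack RC file.
# The group name "nfs-server" and CIDR 10.0.0.0/24 are hypothetical defaults.

create_nfs_secgroup() {
  local name="${1:-nfs-server}"   # hypothetical security group name
  local cidr="${2:-10.0.0.0/24}"  # hypothetical cluster subnet allowed in

  openstack security group create \
    --description "NFS (TCP 2049) from the Kubernetes cluster network" \
    "$name"

  # One ingress rule: TCP port 2049, limited to the cluster subnet.
  openstack security group rule create \
    --ingress --ethertype IPv4 --protocol tcp --dst-port 2049 \
    --remote-ip "$cidr" \
    "$name"
}
```

For example, `create_nfs_secgroup nfs-server 10.0.0.0/24`. Restricting `--remote-ip` to the cluster subnet, rather than allowing all sources, keeps the NFS port closed to outside traffic even after a floating IP is attached to the VM.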

Then create an Ubuntu VM from Horizon. In the Network selection dialog, connect the VM to the network of your Kubernetes cluster; this gives the cluster nodes access to the NFS server over the private network. Finally, assign the security group with port 2049 open to the VM.
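The same VM can also be created from the command line. In this minimal sketch every argument is a placeholder you must look up (`openstack image list`, `openstack flavor list`, `openstack network list`, `openstack keypair list`), and the default security group name `nfs-server` is the hypothetical one used above.

```bash
#!/usr/bin/env bash
# Minimal sketch (assumption): create the NFS VM with the OpenStack CLI.
# name, image, flavor, network and key are placeholders you must supply.

create_nfs_vm() {
  local name="$1" image="$2" flavor="$3" network="$4" key="$5"
  local secgroup="${6:-nfs-server}"  # hypothetical group opening TCP 2049

  # --network attaches the VM to the cluster's private network;
  # --wait blocks until the server is ACTIVE and has its address.
  # Repeat --security-group to also add a group that allows SSH (TCP 22).
  openstack server create \
    --image "$image" --flavor "$flavor" \
    --network "$network" --security-group "$secgroup" \
    --key-name "$key" --wait \
    "$name"

  # Print the assigned addresses; note the private one for the NFS setup.
  openstack server show "$name" -f value -c addresses
}
```

For example, `create_nfs_vm nfs-server "<ubuntu-image>" "<flavor>" "<cluster-network>" "<keypair>"`.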

Once the VM is created, you can see that it has a private address assigned. In this example, the private address is 10.0.0.118. Take note of your own address, because you will need it later in the NFS configuration.

Assign a floating IP to the VM; it is needed only to SSH into this VM.
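After you SSH into the VM through its floating IP, the server-side steps (installing an NFS server, creating the share folder and making the share available) could look like this minimal sketch. It is an assumption, not necessarily the exact procedure of this guide: it relies on Ubuntu's `nfs-kernel-server` package, uses the `/mnt/myshare` path that the pod manifest mounts, and the client subnet `10.0.0.0/24` and export options are illustrative.

```bash
#!/usr/bin/env bash
# Minimal sketch (assumption): server-side NFS setup on the Ubuntu VM.
# Run as root (e.g. via sudo). The subnet and export options are illustrative.

install_nfs_server() {
  apt-get update && apt-get install -y nfs-kernel-server
}

export_nfs_share() {
  local share="${1:-/mnt/myshare}"         # path mounted by the test pod
  local clients="${2:-10.0.0.0/24}"        # hypothetical cluster subnet
  local exports_file="${3:-/etc/exports}"

  # Create the share folder. With the default root_squash, root in a pod
  # writes as nobody:nogroup, so that account owns the folder.
  mkdir -p "$share"
  chown nobody:nogroup "$share"

  # Add the export line only if it is not there yet (safe to re-run).
  local line="$share $clients(rw,sync,no_subtree_check)"
  grep -qxF "$line" "$exports_file" 2>/dev/null || echo "$line" >> "$exports_file"

  # Re-read the exports file and publish the share.
  exportfs -ra
}
```

For example, save it as `nfs-setup.sh` (a hypothetical name) and run `sudo bash -c '. ./nfs-setup.sh && install_nfs_server && export_nfs_share'`; `sudo exportfs -v` then lists the active export.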

4. Deploy a test pod on the cluster

Make sure you can access your cluster with kubectl, then create a file test-pod.yaml with the following contents:

test-pod.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-pod
  namespace: default
spec:
  containers:
    - image: nginx
      name: test-container
      volumeMounts:
        - mountPath: /my-nfs-data
          name: test-volume
  volumes:
    - name: test-volume
      nfs:
        server: 10.0.0.118
        path: /mnt/myshare
```

The `server` field of the `nfs` volume refers to the private IP address of the NFS server machine, which is on the cluster network, and `path` is the shared folder on that machine. Apply the YAML manifest with:

```bash
kubectl apply -f test-pod.yaml
```
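If the pod stays in `ContainerCreating`, the NFS mount is the usual suspect. This minimal sketch waits for the pod to become Ready and, if it does not, prints `kubectl describe`, whose Events section typically shows mount errors such as a wrong server IP or path, or port 2049 blocked by the security group. The function name and the 120-second default timeout are arbitrary choices.

```bash
#!/usr/bin/env bash
# Minimal sketch: wait for the test pod to become Ready; on failure, show
# its description so NFS mount errors in the Events section are visible.

wait_for_pod() {
  local pod="${1:-test-pod}"
  local timeout="${2:-120s}"   # arbitrary default; adjust as needed

  if ! kubectl wait --for=condition=Ready "pod/$pod" --timeout="$timeout"; then
    kubectl describe pod "$pod"
    return 1
  fi
}
```

For example, `wait_for_pod test-pod`.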

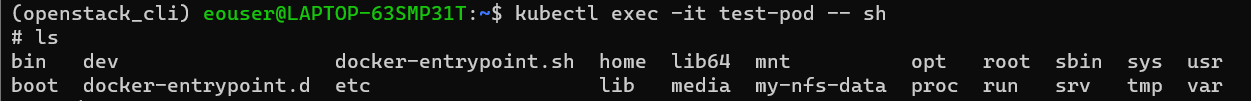

You can then open a shell in test-pod with the following command:

```bash
kubectl exec -it test-pod -- sh
```

and check that the /my-nfs-data folder is mounted:

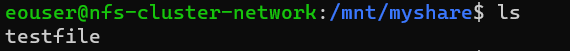

To verify that the share works, create a file named testfile in this folder and exit the container. Next, SSH back to the NFS server and check that testfile is present in the /mnt/myshare folder.
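The same check can be scripted. This minimal sketch assumes kubectl access to the cluster and SSH access to the VM through its floating IP; the SSH target (`<user>@<floating-ip>`) is a placeholder you supply, since the login user depends on the image. It writes a timestamped marker through the pod and succeeds only if the server reads back the same text.

```bash
#!/usr/bin/env bash
# Minimal sketch: write a file through the pod and read it back on the VM.
# ssh_target (e.g. <user>@<floating-ip>) is a placeholder you must supply.

verify_nfs_roundtrip() {
  local pod="$1" ssh_target="$2"
  local pod_dir="${3:-/my-nfs-data}"     # mountPath from test-pod.yaml
  local server_dir="${4:-/mnt/myshare}"  # shared folder on the VM
  local marker="written by $pod at $(date -u +%Y-%m-%dT%H:%M:%SZ)"

  # Write the marker into the NFS-backed folder from inside the container.
  kubectl exec "$pod" -- sh -c "echo '$marker' > $pod_dir/testfile"

  # Read it back on the server; succeed only if the contents match.
  [ "$(ssh "$ssh_target" cat "$server_dir/testfile")" = "$marker" ]
}
```

For example, `verify_nfs_roundtrip test-pod <user>@<floating-ip> && echo "NFS share OK"`.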